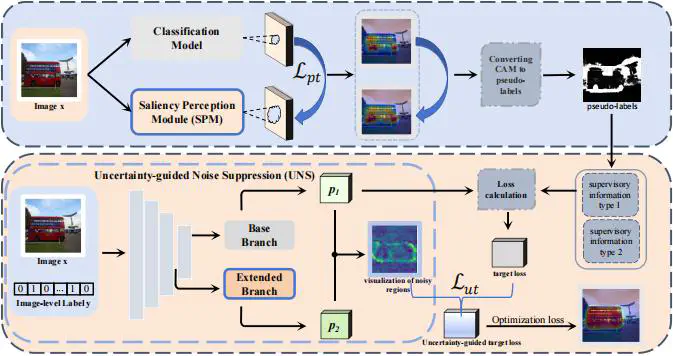

Weakly Supervised Semantic Segmentation via Saliency Perception with Uncertainty-guided Noise Suppression

Model Architecture

Model ArchitectureAbstract

Weakly Supervised Semantic Segmentation (WSSS) has become increasingly popular for achieving remarkable segmentation with only image-level labels. Current WSSS approaches extract Class Activation Mapping (CAM) from classification models to produce pseudo-masks for segmentation supervision. However, due to the gap between image-level supervised classification loss and pixel-level CAM generation tasks, the model tends to activate discriminative regions at the image level rather than pursuing pixel-level classification results. Moreover, insufficient supervision leads to unrestricted attention diffusion in the model, further introducing inter-class recognition noise. In this paper, we introduce a framework that employs Saliency Perception and Uncertainty (SPU), which includes a Saliency Perception Module (SPM) with Pixel-wise Transfer Loss (SP-PT), and an Uncertainty-guided Noise Suppression method (UNS). Specifically, within the SPM, we employ a hybrid attention mechanism to expand the receptive field of the module and enhance its ability to perceive salient object features. Meanwhile, a Pixel-wise Transfer Loss is designed to guide the attention diffusion of the classification model to non-discriminative regions at the pixel-level, thereby mitigating the bias of the model. To further enhance the robustness of CAM for obtaining more accurate pseudo-masks, we propose a noise suppression method based on uncertainty estimation, which applies a confidence matrix to the loss function to suppress the propagation of erroneous information and correct it, thus making the model more robust to noise. We conducted experiments on the PASCAL VOC 2012 and MS COCO 2014, and the experimental results demonstrate the effectiveness of our proposed framework. Code is available at https://github.com/pur-suit/SPU.