Model Architecture

Abstract

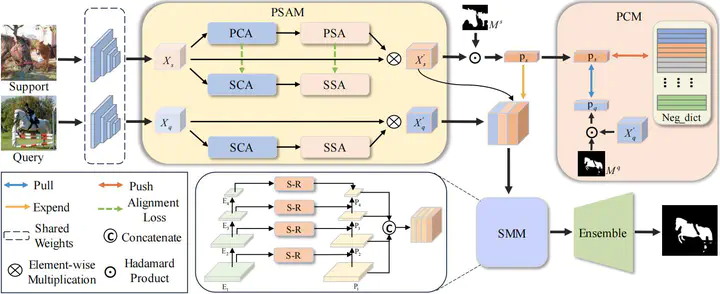

Few-shot semantic segmentation aims to learn a model that generalizes to unseen classes from only a few densely annotated samples. Most current metric-based prototype learning models derive prototypes directly from support samples through masked average pooling and use them to guide segmentation of the query sample. However, these methods often overlook the semantic ambiguity of prototypes, the performance degradation under extreme object variations, and the semantic similarity between different classes. In this paper, we introduce a novel network architecture named Prototype-guided Salient Attention Network (PSANet). Specifically, we employ prototype-guided attention to learn salient regions, assigning different attention weights to features at different spatial locations of the target so that salient regions carry more weight within the prototype. To mitigate the impact of external distractor categories on the prototype, we propose a contrastive loss that yields a more discriminative prototype by promoting inter-class feature separation and intra-class feature compactness. Moreover, we add a refinement operation to the multi-scale module to better capture complete contextual information from features at various scales. Despite its simplicity, our approach proves effective in extensive experiments on the PASCAL-5$^i$ and COCO-20$^i$ datasets. Our code is available at https://github.com/woaixuexixuexi/PSANet.
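
For readers unfamiliar with the baseline that the abstract refers to, the following is a minimal NumPy sketch of masked average pooling and of the cosine-similarity map commonly used to compare a prototype with feature locations. It is an illustration under stated assumptions, not the PSANet implementation: the function names `masked_average_pooling` and `cosine_similarity_map`, the `(C, H, W)` feature layout, and the toy inputs are all hypothetical, and the prototype-guided attention and contrastive loss proposed in the paper are not reproduced here.

```python
import numpy as np


def masked_average_pooling(features, mask, eps=1e-8):
    """Average the features over the foreground region of a support mask.

    features: array of shape (C, H, W), an assumed feature-map layout.
    mask:     array of shape (H, W) with 1 for foreground, 0 for background.
    Returns a prototype vector of shape (C,).
    """
    mask = mask.astype(features.dtype)
    # Zero out background locations, then sum over the spatial dimensions.
    weighted_sum = (features * mask[None, :, :]).sum(axis=(1, 2))
    # Normalize by the foreground area; eps guards against an empty mask.
    return weighted_sum / (mask.sum() + eps)


def cosine_similarity_map(features, prototype, eps=1e-8):
    """Cosine similarity between the prototype and every spatial location.

    Returns an array of shape (H, W).
    """
    feature_norms = np.linalg.norm(features, axis=0) + eps
    prototype_norm = np.linalg.norm(prototype) + eps
    dots = np.einsum("chw,c->hw", features, prototype)
    return dots / (feature_norms * prototype_norm)


# Toy support features: channel 0 fires on the top row, channel 1 on the bottom row.
support_features = np.zeros((2, 2, 2))
support_features[0] = [[1.0, 1.0], [0.0, 0.0]]
support_features[1] = [[0.0, 0.0], [1.0, 1.0]]

# The annotated foreground is the top row only.
support_mask = np.array([[1, 1], [0, 0]])

prototype = masked_average_pooling(support_features, support_mask)
similarity = cosine_similarity_map(support_features, prototype)
```

In this toy case the prototype is dominated by channel 0, so the similarity map is high on the top row and near zero on the bottom row. Because plain masked average pooling weights every foreground location equally, it gives no extra emphasis to salient regions, which is the limitation the prototype-guided attention described above is intended to address.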