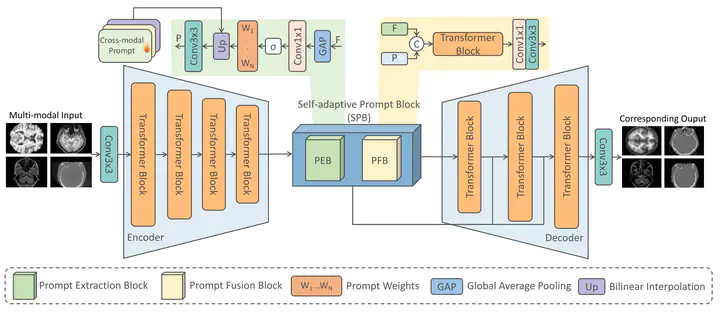

MedPrompt: Cross-Modal Prompting for Multi-Task Medical Image Translation

Model Architecture

Abstract

Translating medical images across modalities is crucial for synthesizing missing data and aiding clinical diagnosis. However, existing learning-based techniques struggle to capture cross-modal and global features. They are also typically tailored to a specific pair of modalities, which limits their practical utility because the missing modality varies from case to case. In this study, we introduce MedPrompt, a multi-task framework that efficiently translates between diverse modalities. Its Self-adaptive Prompt Block dynamically guides the translation network to handle each modality effectively, while the Prompt Extraction Block and the Prompt Fusion Block encode the cross-modal prompt efficiently. We further use a Transformer to strengthen the extraction of global features across modalities. Extensive experiments on five datasets covering four modality pairs show that MedPrompt achieves state-of-the-art visual quality and excellent generalization. These results highlight the effectiveness and versatility of MedPrompt for cross-modal medical image translation.
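The abstract does not describe the internals of the Self-adaptive Prompt Block. As a rough illustration of how a prompt block can condition one network on its input, here is a minimal NumPy sketch loosely modeled on the prompt generation module of PromptIR, in simplified form. Globally pooled features produce softmax weights over a set of learnable prompt components. The weighted prompt is then resized to the feature map and concatenated along the channel axis. `PromptBlockSketch` and all of its parameters are hypothetical. This is not MedPrompt's implementation: a real block would learn its weights by backpropagation and would typically use bilinear resizing followed by convolutions.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


class PromptBlockSketch:
    """Hypothetical PromptIR-style prompt block (illustrative, NOT MedPrompt's code)."""

    def __init__(self, channels: int, num_prompts: int, prompt_dim: int,
                 prompt_size: int, seed: int = 0) -> None:
        rng = np.random.default_rng(seed)
        # N prompt components, each of shape (prompt_dim, prompt_size, prompt_size);
        # randomly initialised here, learned during training in a real model.
        self.prompts = rng.standard_normal(
            (num_prompts, prompt_dim, prompt_size, prompt_size))
        # Linear map from pooled features (C,) to one logit per prompt component.
        self.w = rng.standard_normal((num_prompts, channels)) * 0.1
        self.b = np.zeros(num_prompts)

    def __call__(self, feat: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
        """feat has shape (C, H, W); returns (concatenated features, prompt weights)."""
        _, h, w = feat.shape
        pooled = feat.mean(axis=(1, 2))                       # global average pooling -> (C,)
        weights = softmax(self.w @ pooled + self.b)           # input-dependent weights, sum to 1
        prompt = np.tensordot(weights, self.prompts, axes=1)  # weighted sum -> (P, s, s)
        # Nearest-neighbour resize of the prompt to the feature map's (H, W).
        rows = np.arange(h) * prompt.shape[1] // h
        cols = np.arange(w) * prompt.shape[2] // w
        prompt = prompt[:, rows][:, :, cols]                  # (P, H, W)
        # Concatenate along channels so later layers see features plus prompt.
        return np.concatenate([feat, prompt], axis=0), weights


# Usage: a 16-channel 32x32 feature map gains 8 prompt channels.
block = PromptBlockSketch(channels=16, num_prompts=4, prompt_dim=8, prompt_size=5)
feat = np.random.default_rng(1).standard_normal((16, 32, 32))
out, weights = block(feat)
print(out.shape)  # (24, 32, 32)
```

Because the prompt weights are computed from the input features, the same block emits different prompts for inputs from different source modalities. This is the general idea behind using prompts to steer a single multi-task model.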